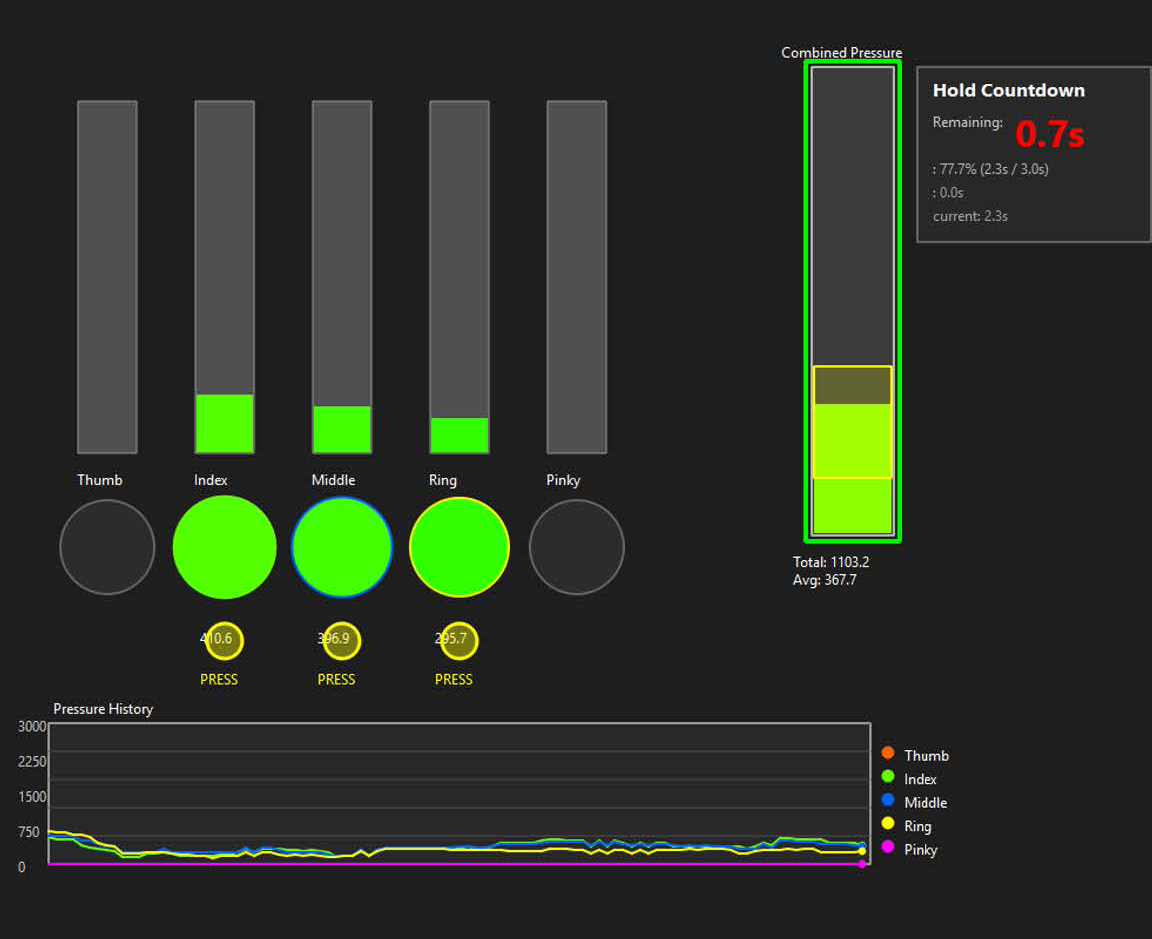

This project, along with palmtrack, serves as the core technology for my entrepreneurial venture. Since the company is just starting out, we don't have an independent website yet, so I'd like to first address some key questions about the startup:

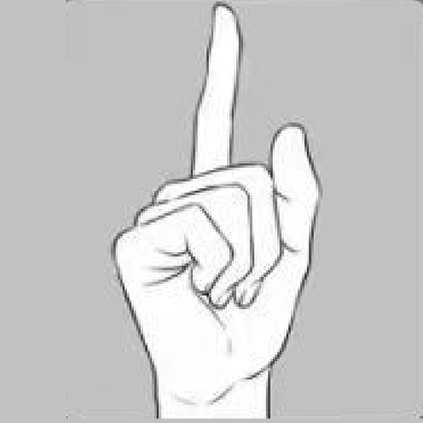

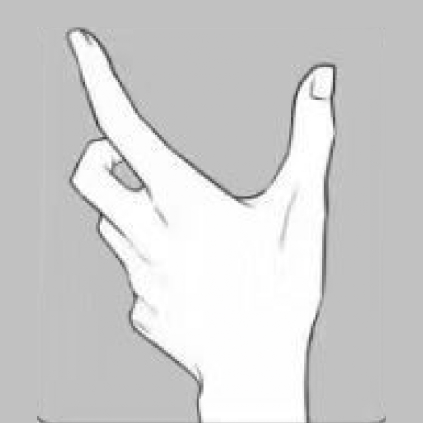

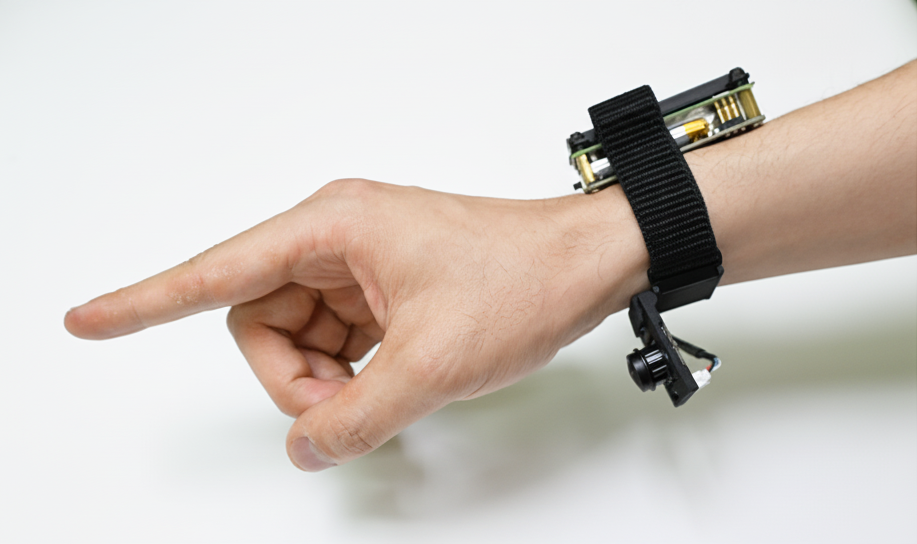

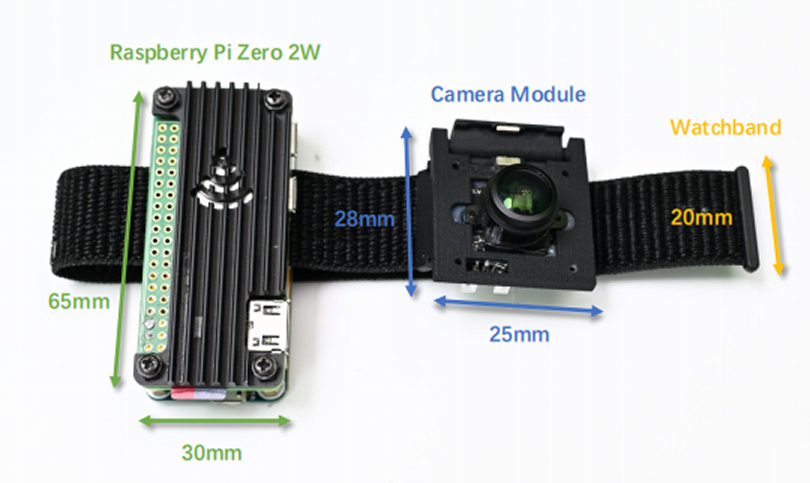

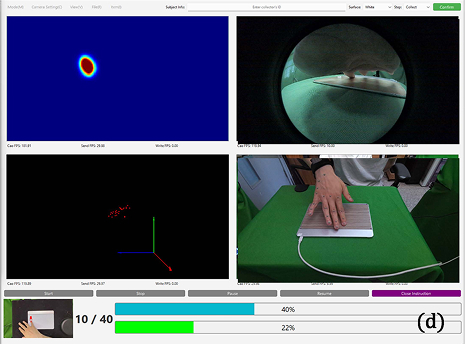

Why start this project? Because I believe that future interaction should go far beyond ever more foldable and thinner screens. With advances in AI, the ideal interaction should return to something like the Stone Age: it should start from basic human intentions and capabilities and let computers understand our subtle emotions and movements. I want to help define the interaction paradigms of this new era, not follow them.

What is your role in the team? I am a co-founder, and the core technology is based on my patent. Our team currently has seven members, and everyone except me is a Tsinghua University PhD.

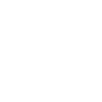

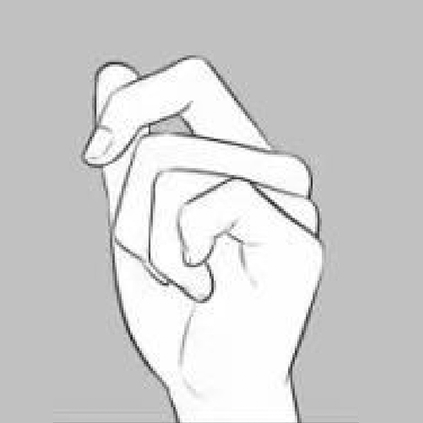

What applications does your startup product have? We are developing a foundational product and will later release an SDK so that developers can build a wide variety of functions on top of it. For example, in engineering it can be paired with dexterous robotic hands for remote control; in entertainment it enables more interactive and immersive games; and in the home of the future it can drive smart-home devices: waving to open or close curtains, snapping your fingers to turn lights on or off, and so on. We believe this will disrupt existing interaction paradigms.

How far has the startup progressed, and what are the results? We have completed our angel round, raising approximately $1.4 million at a valuation of about $6.4 million. Once our related articles are published and our patents are registered, we will open-source the project directly, and we welcome feedback and corrections at that point.